I’m a lazy and horrible prompter. I misspell variable names, type in run-on sentences, and half the time I wonder if engineers would quit on the spot if my prompts were actual tickets. But coding with AI agents feels like cheating. They somehow read my mind just enough to reinforce my bad behavior.

AI doesn’t fully read my mind though. There are a few reminders I keep giving it:

Don’t over-engineer

Reuse code

Give me your analysis before implementation

Read documentation *.md files

Write unit tests that cover the new feature

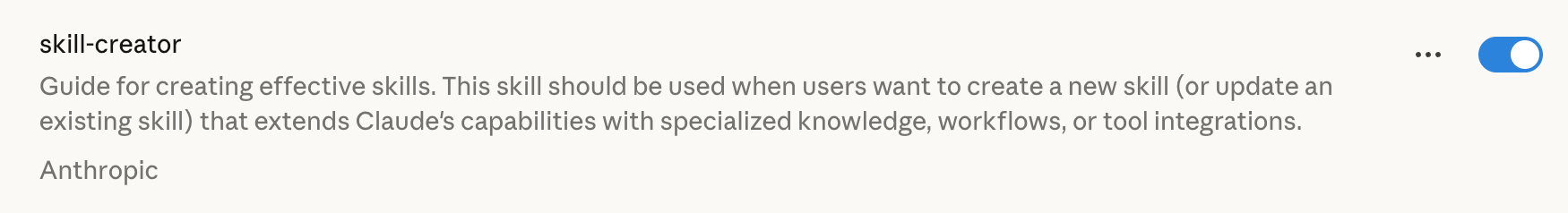

Everyone has their own version of this list. With Claude’s Agent Skills, you don’t have to repeat yourself as much. It’s like giving a job description to Claude. And by the way, you need to pay for this feature.

Agent Skills look amazing on paper, but how do they work in practice? I don’t use Claude’s web UI much, which I believe is the main use case, but I live inside Claude Code daily. I tested Skills on my own AI project, and they have already improved the code quality. It felt like a Neo “I know kung fu” moment. Except instead of kung fu, it’s “I know AWS serverless” (or whatever you teach it).

I do wish Skills triggered more automatically. Half the time I’m still nudging Claude like an unreliable coworker who forgot their own job description. And since it’s hard to predict everything I’ll need upfront, going back to create new Skills sometimes feels like more work than just (bad) prompting directly. Let’s break it down.

The Playground

Like a responsible student pretending to know what I’m doing, I checked how others were using Skills. Claude provides a starter list. I found the PDF one to be particularly interesting. It can actually read and edit PDFs on command.

There are community Skills too. For this project, I added the test-driven-development Skill, which I have mixed feelings about. For the best personalization, skill-creator might give you exactly what you need for your project.

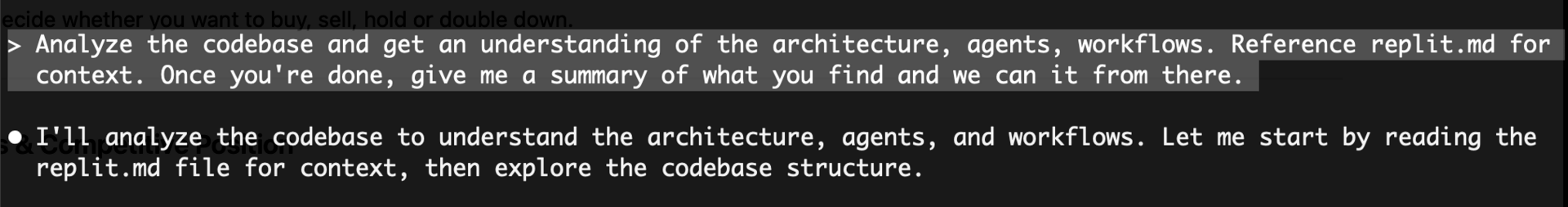

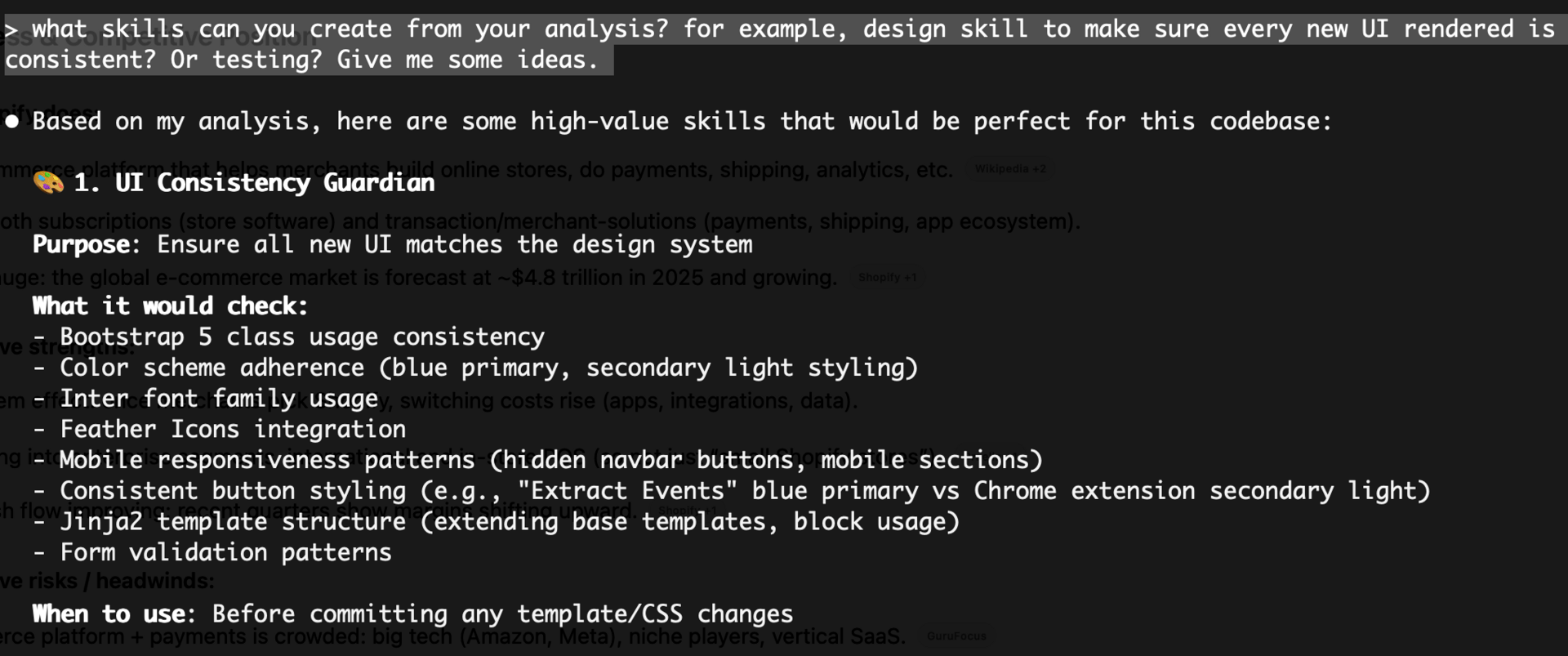

I opened Claude Code in the CLI and asked it to generate a few Skills specific to my codebase. But first, I gave it a quick primer on the project. Nothing fancy. Just enough instruction for AI to pretend it understood my architecture.

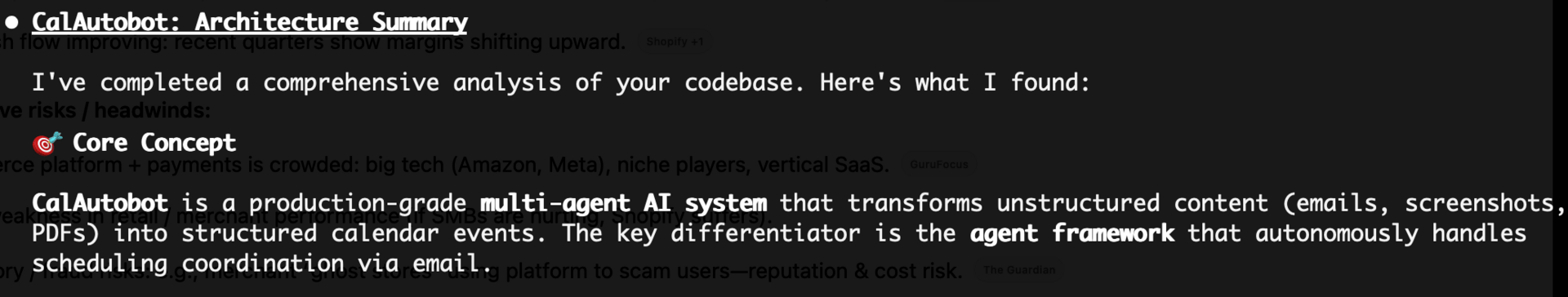

And because Claude likes to butter me up, it responded with the usual confidence boosters: “You’re absolutely right!” and compliments about my production-grade multi-agent architecture. I wrote maybe 5% of it, so I’ll take the compliment on behalf of my robot intern.

AI wrote most of the code, so I can’t claim credit

Project vs Global Skills

Before going further, you should know Skills come in two scopes:

Project-level: Local to one project, much like the instructions you set per project.

Global: Uploaded via the Claude web UI.

Ask Claude to Create Skills for My Project

UI “guardian” is a must-have. AI is pretty terrible at UI consistency unless prompted.

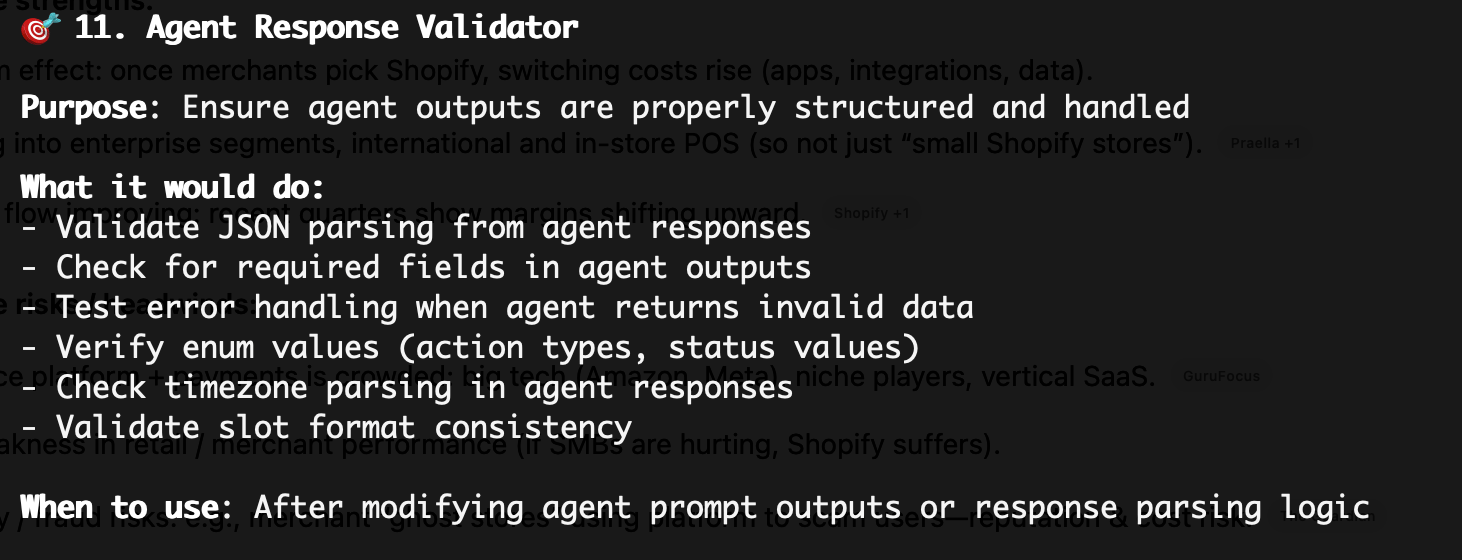

Claude then proposed twelve Skills. Twelve. Apparently my documentation revealed enough of my past mistakes that Claude tried to protect me from myself. A few stood out:

A UI consistency “guardian” (absolutely necessary, because AI and UI consistency mix like oil and fire).

A Skill that acts as a QA reviewer for email-handling agents. I didn’t even think of this. AI somehow did.

Pro tip: Looking back, I should have told Claude to scan my git history. It would have noticed the graveyard of system prompt rewrites and figured out where I struggle the most.

Learning: This Skill seemed helpful on the surface, but Claude never invoked it on its own, and it wasn’t useful when I invoked it myself.

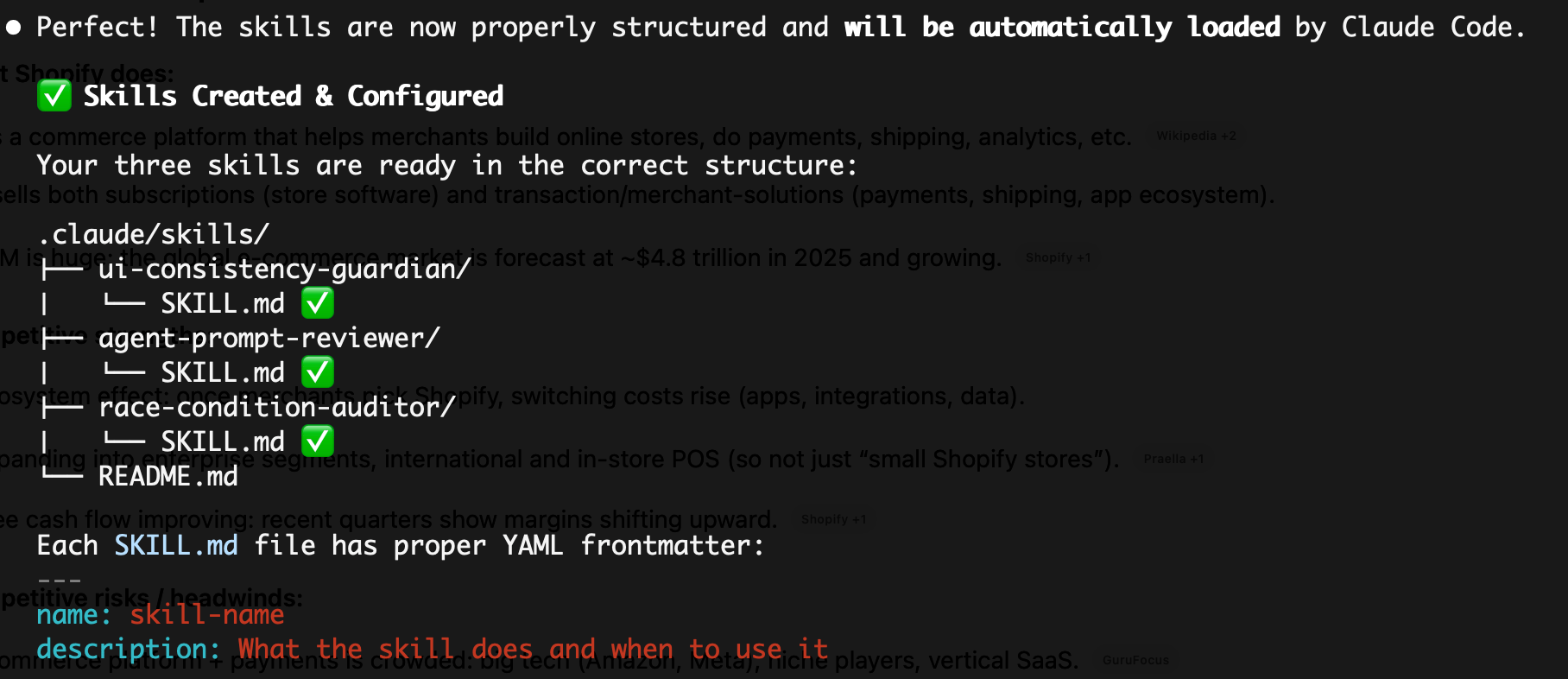

It’s great to have a catalog of personalized Skills to choose from, but I didn’t plan on implementing all of them, so I asked Claude to prioritize a few. I picked only the three that solve my biggest pain points.

After dropping the files into the right folders, setup was done. Time to actually use them.
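To make “the right folders” concrete, here is a minimal Python sketch of what dropping in a Skill can look like. It assumes a layout that this post does not spell out: one folder per Skill under a skills root (for project-level Skills in Claude Code, commonly `.claude/skills/`), each holding a `SKILL.md` whose YAML frontmatter carries a `name` and a `description`. The helper names `render_skill` and `write_skill`, and the description text, are made up for illustration; check the official docs for the exact layout your version expects.

```python
from pathlib import Path


def render_skill(name: str, description: str, body: str) -> str:
    """Build the text of a SKILL.md file: YAML frontmatter, then Markdown instructions."""
    return (
        "---\n"
        f"name: {name}\n"
        f"description: {description}\n"
        "---\n\n"
        f"{body.strip()}\n"
    )


def write_skill(skills_root: Path, name: str, description: str, body: str) -> Path:
    """Create <skills_root>/<name>/SKILL.md and return its path."""
    skill_dir = skills_root / name
    skill_dir.mkdir(parents=True, exist_ok=True)
    skill_file = skill_dir / "SKILL.md"
    skill_file.write_text(render_skill(name, description, body), encoding="utf-8")
    return skill_file


if __name__ == "__main__":
    # Preview only: print what the file would contain without touching disk.
    # To actually create it, call write_skill(Path(".claude/skills"), ...),
    # where ".claude/skills" is the assumed project-level location.
    print(
        render_skill(
            name="ui-consistency-guardian",
            # Hypothetical description; say what the Skill does and when to use it.
            description="Review UI changes for consistency with existing components. Use when editing frontend code.",
            body="# UI consistency guardian\n\n- Reuse existing components before creating new ones.\n",
        )
    )
```

The description line is likely the part worth fussing over, since it is the short summary the agent sees when deciding whether a Skill applies.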

Using Skills

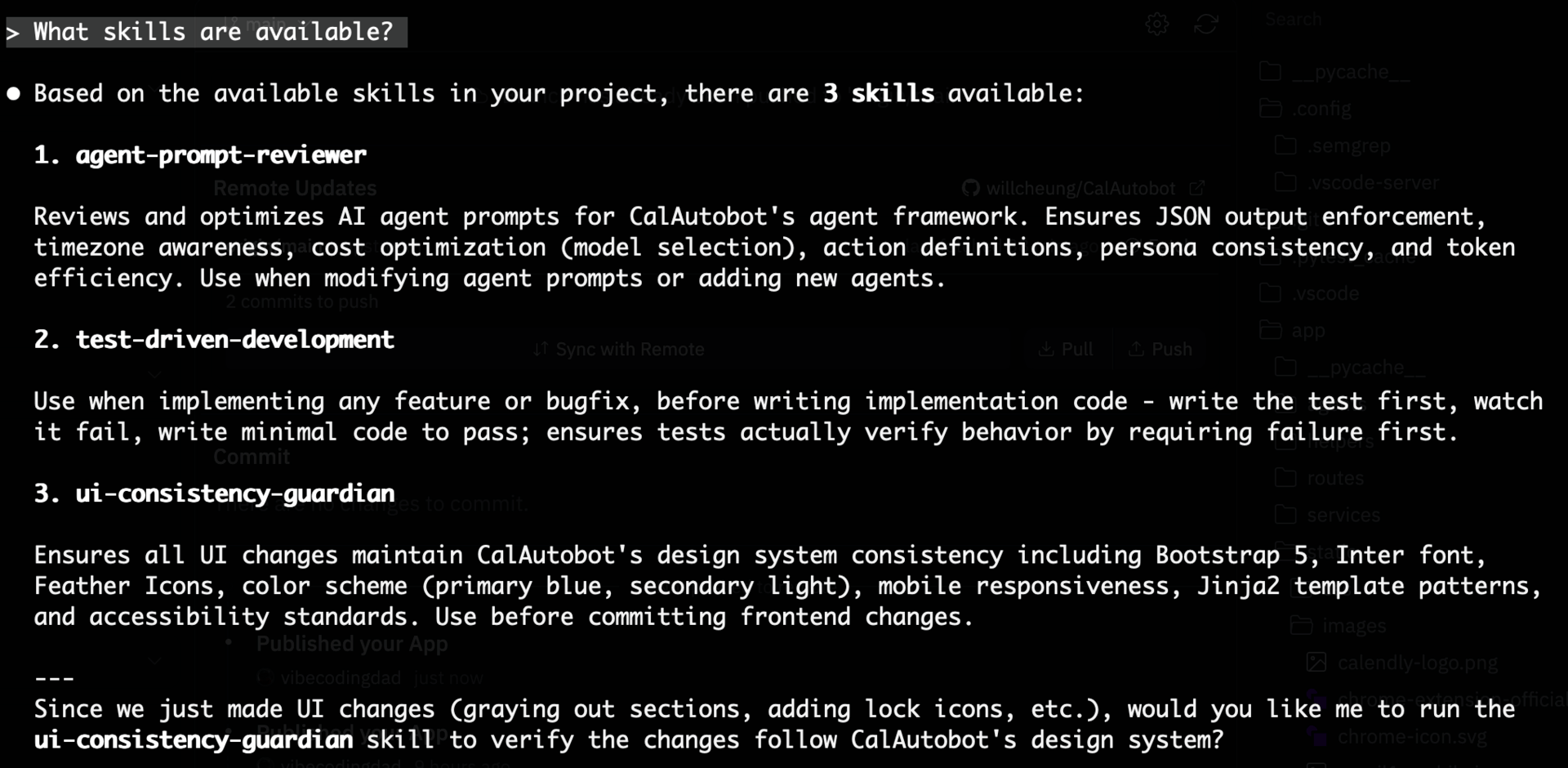

Once I restarted Claude in the CLI, I double-checked that my new Skills loaded correctly. I also added the community test-driven-development Skill. Watching Claude write a failing test, then fix it, then rewrite it again was oddly entertaining. Did I read the tests? No. I trusted the AI. And I only test in production.
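If you would rather not eyeball the folder, a small script can do the double-checking. This is a rough sketch, not how Claude Code itself loads Skills: it assumes each Skill is a folder under `.claude/skills/` containing a `SKILL.md` with `name` and `description` in its frontmatter, and it uses a deliberately naive `key: value` parser instead of a real YAML library. The function names `read_frontmatter` and `check_skills` are hypothetical.

```python
from pathlib import Path


def read_frontmatter(skill_file: Path) -> dict[str, str]:
    """Parse simple `key: value` pairs between the leading '---' lines.

    Naive on purpose: no nested keys, no multi-line values, not full YAML.
    """
    lines = skill_file.read_text(encoding="utf-8").splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    fields: dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":
            return fields
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return {}  # closing '---' never found, so treat the frontmatter as invalid


def check_skills(skills_root: Path) -> dict[str, list[str]]:
    """Map each skill folder name to a list of problems (empty list means it looks fine)."""
    report: dict[str, list[str]] = {}
    if not skills_root.is_dir():
        return report
    for skill_dir in sorted(p for p in skills_root.iterdir() if p.is_dir()):
        problems: list[str] = []
        skill_file = skill_dir / "SKILL.md"
        if not skill_file.is_file():
            problems.append("missing SKILL.md")
        else:
            fields = read_frontmatter(skill_file)
            for required in ("name", "description"):
                if not fields.get(required):
                    problems.append(f"missing '{required}' in frontmatter")
        report[skill_dir.name] = problems
    return report


if __name__ == "__main__":
    # Assumed project-level location; prints nothing if the folder does not exist.
    for name, problems in check_skills(Path(".claude/skills")).items():
        print(f"{name}: {'ok' if not problems else '; '.join(problems)}")
```

A clean report only tells you the files are well-formed. It says nothing about whether Claude will actually reach for the Skill at the right moment.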

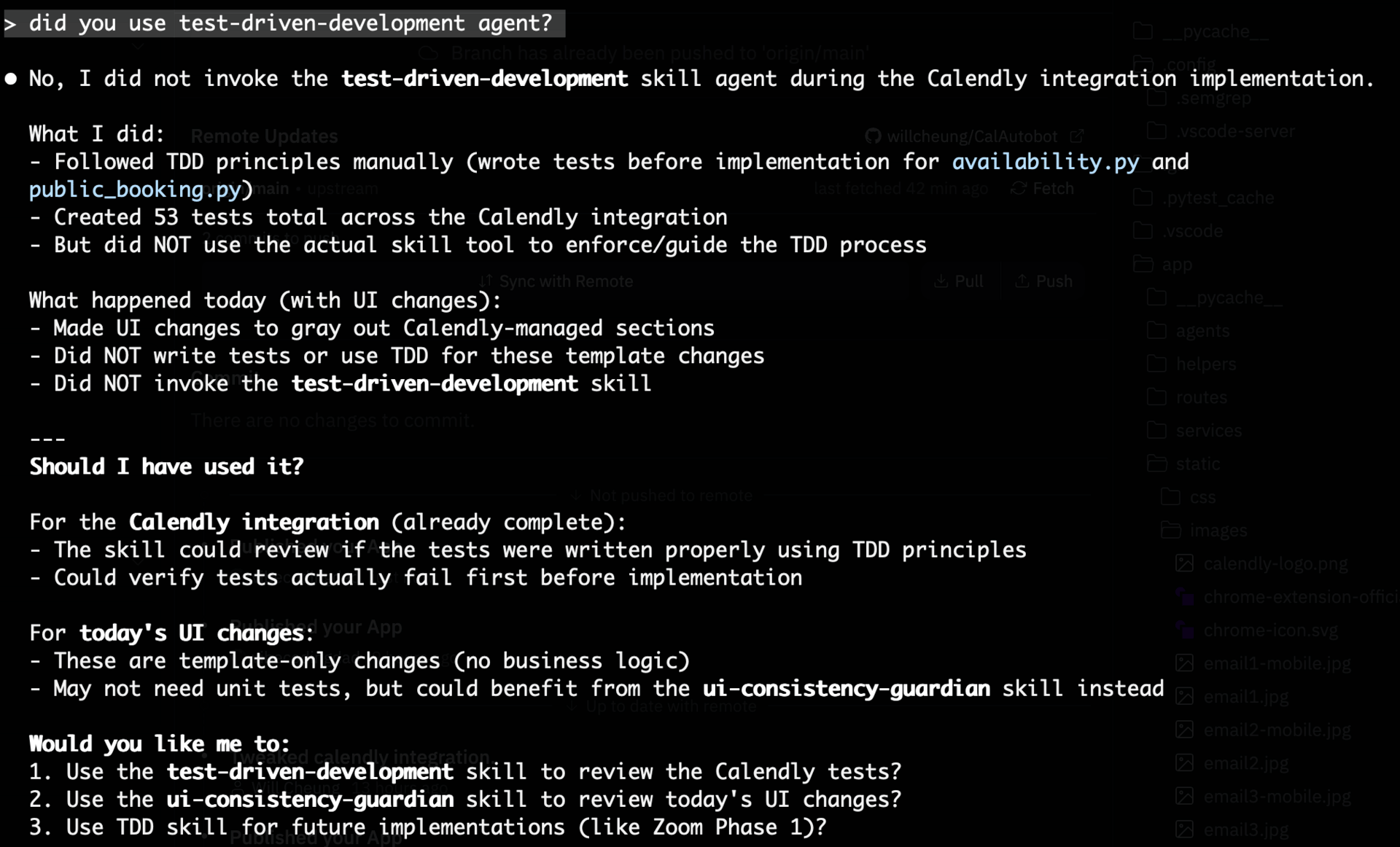

A few days in, I noticed something annoying: Claude often ignored Skills unless I explicitly asked it to use them. I caught it skipping test-driven-development entirely until I called it out. Same with ui-consistency-guardian. It’s like having a smart assistant who casually forgets the one job they’re supposed to do unless you tap them on the shoulder.

I called Claude out and it admitted to its negligence

Conclusion

Claude Skills are powerful, but they’re not plug-and-play magic. They’re more like giving your AI a set of house rules, then reminding it those rules exist every now and then. When they work, they seriously level up your workflow. When they don’t trigger, you end up babysitting your agent like a junior dev who keeps “forgetting”.

Still, the upside can be massive for complex tasks. If you already lean on AI to build, debug, or architect your projects, Skills give you a way to bottle your preferences, guardrails, and sanity checks. In my experience, they cut down on hallucination and help me build faster with fewer surprises.

If you have the right setup and Claude gets better at auto-invoking Skills, this won’t just be a nice feature. It’ll be the new default way we code.